TL;DR = As visual artists confronting AI, we need to forge our own tools that let us exploit this new technology, instead of letting it exploit us.

We’ve all seen the self-proclaimed bellwether posts: ‘Photoshop is dead’, ‘Goodbye concept artists’, ‘Who needs copywriters now?’ We’re being inundated with stories about AI and how it will simultaneously revolutionise the creative world and wreck the careers of everyone in it. But to me, as a digital artist working in visual storytelling, these extreme takes ring hollow.

Is AI going to change the way we work? Probably

Is AI going to change who we are as artists? Not at all.

Behind this is my assumption that those of us who enjoy crafting imagery aren’t about to pack it all in and let machines do our work. Instead we have an important job to do. We need to forge our own tools that let us exploit the new technology, instead of letting it exploit us. It’s crunch time. Read anything about AI image-making these days and you’d be inclined to think that it all boils down to two options: you either let AI completely replace the way you make images and moving pictures (by using full frame text to image tools such as Midjourney) or you begrudgingly allow AI tools to act as co-creators embedded in your software (as with the AI image extension tools in Photoshop for example.) I’m grateful for some of these advances. A tool that can strip unwanted objects out of a shot and create a clean plate is a godsend. But I draw the line when it starts inventing its own content. Unless you enjoy letting a machine make all or most of your creative choices, using AI this way feels like a compromise. But there are other ways, where we still keep creative control but also get a lot of new power.

So let’s start with something really simple. I recently made a tool for creating colour palettes using natural language. The user just types in a colour description. It can be anything from stating an actual colour to completely subjective prose. The tool doesn’t mind. It just comes back with a response: a ramp of five colours that sit nicely together. If you like it, you can use it. If not try something else. The AI behind this is the large language model (LLM) ChatGPT. While ChatGPT has a reputation for being less than truthful, it’s a great tool for converting natural language - the way we speak - into structured data like the numbers that describe colours in digital images. The subjectivity doesn’t matter. Colour preferences are subjective anyway, right?

Using LLMs as language-to-data systems in VFX is, to me, an under-appreciated resource. A lot of the drudgery of looking up values can simply be eliminated. What’s the Index of Refraction (IoR) for Perspex? What are the best values for a procedural noise mask for a rusting iron material? Instead of digging out these numbers through searches or trial and error, we can just type in these descriptions in the relevant part of our software and an LLM will plug in numbers. The great bonus is we maintain control. The system populates our setups with the data and values we request and then we can adjust them as we see fit. As digital artists, we’ve trained ourselves to see the world the way a computer does: as numbers. Using an LLM this way actually lets us go back to describing the world as place of colour, form and texture.

In terms of tools, these are quite low-level. They perform small, specific functions, but can be very powerful when used together. They’re the hammers and chisels in our toolbox. But what about the higher-level tools? What’s the compound mitre saw in this world? I guess that depends on where you sit within a creative team or pipeline. Personally I’m looking at ways to reduce some of the heavy lifting in 3D VFX work and let me focus on the story I want to tell. One toolset that’s peaked my interest is called MLOPs. Developed by Bismuth Consultancy and Entagma, MLOPs brings the power of machine learning tools into my go to 3D application, Houdini.

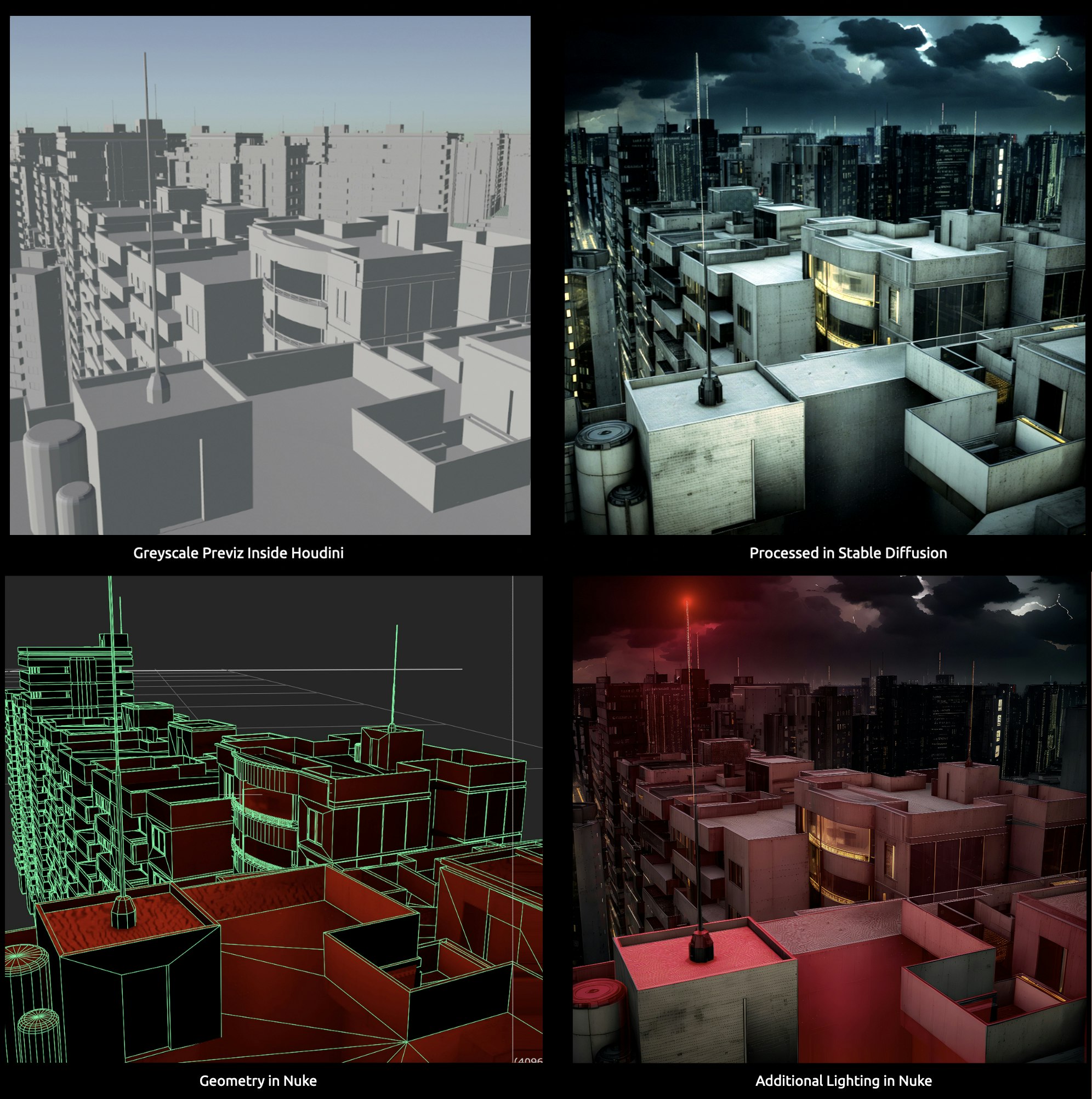

Right now, MLOPs’ main AI engine is Stable Diffusion, an open source machine learning system for making images. At first glance Stable Diffusion could be mistaken for Midjourney, but with slightly less successful output. In reality Stable Diffusion is a much more open system and gives users a lot of interactivity. As well as text prompts, users can feed a broad spectrum of information into Stable Diffusion to guide it. The main route into this is ControlNet. ControlNet allows users to translate guide imagery into specific image formats that Stable Diffusion can understand. For example, working within MLOPs I can build a 3D scene with simple low-detail objects, setup a camera and lens, and then rasterise that scene into images that represent standard 3D render passes such as normal, depth and segmentation maps which identify specific objects within the scene. There’s no conventional rendering in this. MLOPs flattens the scene into passes in realtime. ControlNet can read these passes and convey them as guide information into Stable Diffusion. So suddenly I’ve got an AI image generation system where I control camera, layout and content all working inside the familiar working environment of Houdini. Likewise, I’ve still got all the benefits that come with the actual 3D scene I built. I can move my camera, shift objects around, change the lens - all the subtle control that we typically give up when working with a full frame AI system like Midjourney. Finally I can pass everything from my original 3D geometry and camera, the ControlNet passes, and the Stable Diffusion image output into Nuke, where I can project the AI generated texture back onto my 3D scene and play with lighting and compositing effects. In other words, I’ve not changed my 3D pipeline to accommodate AI. Instead MLOPs has brought the AI tools into my pipeline. A massive difference.

With Stable Diffusion, this is just the tip of an ever-growing iceberg. Being open source, the capabilities of Stable Diffusion are growing at a dizzying pace, mainly thanks to ControlNet and it’s many outputs.

Another valid criticism of AI tools like this is the likelihood that the models were trained on other artists’ material, without their permission. Using art generated this way may or may not legally constitute theft. We’re waiting for the courts to decide that. But as image-makers we need to be conscious of our role in the creative ecosystem. We’re here to invent, not steal. Stable Diffusion does offer one mitigating option: we can train Stable Diffusion models on our own artwork, or work we’re legally entitled to use. Studios or design houses with their own look and even a modest size library of their own images can create their own Stable Diffusion model in less than 24 hours. And MLOPs includes tools to simplify this. This is essential at studios, such as my employer, if we’re to have any chance of verifying the prominence of our creations. While we can’t control how the base Stable Diffusion model has been trained, we can still make it clear that the imagery we’re creating used a model trained on our own body of work, along with scenes we’ve crafted and compositing done by our artists. As we contemplate using these tools on paid work with brand and agency clients, we’re sharing the process with them at every step. It’s essential, morally and legally.

That’s my take. I’d love to hear your thoughts, especially how you’re working with AI tools like this.